Introduction

In The Pragmatic Programmer, David Thomas and Andrew Hunt advise: “Treat test code with the same care as any production code. Keep it decoupled, clean and robust. Don’t rely on unreliable things [...]. Testing for these sorts of things will result in fragile tests.”

In essence, they argue that unit tests are as crucial to a project's success as the production code itself. Unit tests improve the implementation's design, and they also make it easier to refactor and add new features later by lowering the likelihood that something breaks without being noticed. We can conclude that unit tests can significantly raise the quality of our solution. But do all unit tests achieve that?

The answer is definitely no. You will enjoy the benefits of unit tests only if they are well written. Otherwise, you’ll end up wasting time fixing them whenever you change your solution, and then retesting every added feature manually because you don’t trust the unit tests’ results.

In the rest of this article I'll outline a few techniques that will improve the quality of the unit tests you write. The main concepts apply regardless of the technologies you adopt, even though one section refers to the Karma test runner and the examples are written using Jasmine.

1. Do not "over test"

Especially when I was writing UI unit tests, I frequently felt the urge to test everything. However, trying to check every single piece of text on a page does not guarantee that your unit tests will be more robust as a result.

A very common example is checking entire texts displayed in the interface. In the next example I want to test that the title of a web page is displayed. The title is made up of two parts:

- a static one: “Hello, ”

- a variable one: the full name of the logged in user

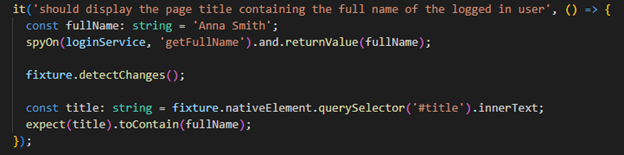

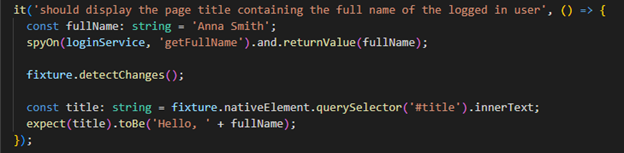

In image 1 - 1, the test checks that the title contains the full name, while in 1 - 2 it verifies the entire string. We only need to check that the displayed text contains the variable parts. Verifying that the title is an exact hardcoded string adds no value to the unit test, but it increases the chance that the test fails when the functionality works perfectly and only the displayed text has changed. If the title had no variable part, I would only check that the string is not null or empty.

1 - 1

1 - 2
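As a rough sketch of the difference between the two checks, the snippet below uses plain TypeScript so that it runs without a browser or a test runner. The functions `buildTitle` and `buildTitleAfterCopyChange` and the sample name are hypothetical and only serve the illustration. In a Jasmine spec the two checks would correspond to `expect(title).toContain(fullName)` and `expect(title).toBe('Hello, Jane Doe')`.

```typescript
// Hypothetical implementation: a static greeting plus the user's full name.
function buildTitle(userFullName: string): string {
  return 'Hello, ' + userFullName;
}

// The same feature after a harmless change to the static part of the text.
function buildTitleAfterCopyChange(userFullName: string): string {
  return 'Welcome back, ' + userFullName;
}

const fullName = 'Jane Doe';

// Robust check: only the variable part must be present in the title.
function containsFullName(title: string): boolean {
  return title.indexOf(fullName) !== -1;
}

// Fragile check: the whole string is hardcoded in the test.
function equalsHardcodedTitle(title: string): boolean {
  return title === 'Hello, Jane Doe';
}

// Both checks pass against the original implementation.
const robustBefore = containsFullName(buildTitle(fullName));
const fragileBefore = equalsHardcodedTitle(buildTitle(fullName));

// After the copy change the robust check still passes,
// while the fragile one fails although nothing is broken.
const robustAfter = containsFullName(buildTitleAfterCopyChange(fullName));
const fragileAfter = equalsHardcodedTitle(buildTitleAfterCopyChange(fullName));
```

Only the fragile check reports a failure after the wording change, which is exactly the kind of false alarm this section warns about.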

Nevertheless, there is one situation in which I would verify the entire text: when the project includes resource files containing the texts used in the interface. It’s safe to compare the strings in those files with the actual values displayed in the UI, because any change is made in a single place, the resource file, which is used both in the implementation and in the unit tests. In this specific case you can be certain that the unit tests won’t raise a false alarm.
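A minimal sketch of that idea, assuming a hypothetical `uiTexts` object that stands in for the resource file and a hypothetical `buildTitle` function that reads from it:

```typescript
// Hypothetical resource file content, shared by the implementation and the tests.
const uiTexts = { greeting: 'Hello, ' };

// Hypothetical implementation that takes the static part from the resource file.
function buildTitle(userFullName: string): string {
  return uiTexts.greeting + userFullName;
}

// The expected value is built from the same resource entry,
// not from a string hardcoded in the test.
const expectedTitle = uiTexts.greeting + 'Jane Doe';
const actualTitle = buildTitle('Jane Doe');
const titlesMatch = actualTitle === expectedTitle;
```

Because the expected and the actual value come from the same entry, changing the greeting in the resource file changes both sides of the comparison at once.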

2. Configure the test runner to fail if a unit test doesn’t have or reach the expect statement

It is commonly recommended that a unit test verify just one test case, which ideally means a single assertion per test. However, there are situations in which a unit test ends up with multiple assertions. If your environment allows it, configure it to fail the unit test if it doesn’t reach all its expect statements or if it has none.

The risk is that you believe your functionality is completely tested because all the unit tests pass, when in fact that portion of code is never verified. If you don't notice soon enough, the robustness and quality of your unit tests decrease. I bet such a unit test will be left as it is, checking nothing, long enough for everyone to forget what it was about.
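To make the risk concrete, here is a small sketch in plain TypeScript. The `runSpec` helper is a hypothetical stand-in for a test runner, not Jasmine's API; it only counts how many expectations a spec body actually executed. The `findUser` function is likewise invented for the illustration.

```typescript
// Hypothetical stand-in for a test runner: it counts executed expectations.
type Expect = (actual: unknown) => { toBe(expected: unknown): void };
type SpecResult = { passed: boolean; expectations: number };

function runSpec(body: (expect: Expect) => void): SpecResult {
  let expectations = 0;
  let passed = true;
  const expect: Expect = (actual: unknown) => ({
    toBe(expected: unknown): void {
      expectations += 1;
      if (actual !== expected) {
        passed = false;
      }
    },
  });
  body(expect);
  return { passed, expectations };
}

// Hypothetical code under test.
function findUser(users: string[], wanted: string): string | undefined {
  return users.indexOf(wanted) !== -1 ? wanted : undefined;
}

// The expectation sits inside a branch that is never reached,
// so the spec "passes" without verifying anything.
const silentSpec = runSpec((expect) => {
  const user = findUser(['Jane Doe'], 'John Doe');
  if (user) {
    expect(user).toBe('John Doe');
  }
});

// A stricter rule: a spec only counts as passed if it ran at least one expectation.
function passedStrictly(result: SpecResult): boolean {
  return result.passed && result.expectations > 0;
}

const silentSpecPassesStrictly = passedStrictly(silentSpec);
```

Under the lenient rule the spec is reported as passed even though it ran zero expectations; under the strict rule it is reported as a failure, which is the behaviour this section recommends enabling.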

Karma allows you to fail all the tests that don’t have expect statements. By default, a spec that ran no expectations is reported as passed. Setting failSpecWithNoExpectations to true in the Karma configuration file will report such a spec as a failure.
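A possible shape for that setting is sketched below. It assumes the karma-jasmine adapter, which to my knowledge forwards the `client.jasmine` block to Jasmine's configuration; please verify this against the versions you use. The `KarmaConfig` type and the function name `configureKarma` are simplifications made up for this sketch. In a real `karma.conf.js` the function would be assigned to `module.exports`.

```typescript
// Simplified, hypothetical type for the object Karma passes to the config function.
type KarmaConfig = { set(options: Record<string, unknown>): void };

// Sketch of a Karma configuration function.
function configureKarma(config: KarmaConfig): void {
  config.set({
    frameworks: ['jasmine'],
    client: {
      jasmine: {
        // Report specs that ran zero expectations as failures.
        failSpecWithNoExpectations: true,
      },
    },
  });
}
```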

3. Simulate user interaction

From my perspective, when considering scenarios for front-end unit tests, one crucial technique is to imagine yourself as a user of the application. Instead of relying solely on testing the logic, get the HTML elements and simulate events on them.

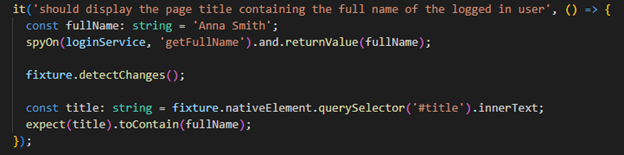

A relevant and simple scenario is testing what a button does. The first step is to identify the selector for the button and get the element. The next step is to simulate the event you need on the HTML element. In image 3 - 1, you can see that the first two steps are exactly the ones just mentioned. In this specific case, the event we need to simulate is a click. The last part is the assertion section, where we check that the method behind the button was called and returned the desired result as a consequence of the click event.

3 - 1
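The three steps can be sketched roughly as follows. To keep the snippet runnable outside a browser, a bare `EventTarget` stands in for the button element; in a real spec you would obtain the element with something like `document.querySelector(...)` and the assertion would be a Jasmine `expect`. The `DialogComponent` class is hypothetical.

```typescript
// Hypothetical component: wires a button to its open() method.
class DialogComponent {
  isOpen = false;

  constructor(buttonElement: EventTarget) {
    buttonElement.addEventListener('click', () => this.open());
  }

  open(): void {
    this.isOpen = true;
  }
}

// Step 1: get the element (an EventTarget stands in for the button here).
const openButton = new EventTarget();
const dialog = new DialogComponent(openButton);

// Step 2: simulate the event the user would trigger.
openButton.dispatchEvent(new Event('click'));

// Step 3: assert on the observable result of the click.
const openedByClick = dialog.isOpen;
```

The test never calls `open()` itself, so it only succeeds if the element is really connected to the logic.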

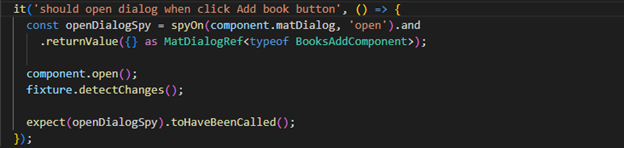

Unfortunately, I have both written and found, in the existing code of projects I worked on, unit tests similar to the one in image 3 - 2. In this unit test, the first part, in which we get the HTML element and simulate the event, was clearly skipped. The method behind the button is called directly, which doesn’t really test much. We verify that the implementation of the open method opens a dialog, but we won’t know whether the HTML elements are correctly linked to the logic behind them.

3 - 2
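The sketch below illustrates what the direct call misses. It uses a hypothetical `UnwiredDialogComponent` in which the click listener was forgotten, and again a bare `EventTarget` as a stand-in for the button so that the snippet runs outside a browser.

```typescript
// Hypothetical component with broken wiring: the button is never connected to open().
class UnwiredDialogComponent {
  isOpen = false;

  constructor(_buttonElement: EventTarget) {
    // Bug: the click listener was forgotten.
  }

  open(): void {
    this.isOpen = true;
  }
}

// Style of 3 - 2: call the method behind the button directly.
const directDialog = new UnwiredDialogComponent(new EventTarget());
directDialog.open();
const directCallLooksFine = directDialog.isOpen; // true, the bug goes unnoticed

// Style of 3 - 1: go through the element and simulate the click.
const unwiredButton = new EventTarget();
const clickedDialog = new UnwiredDialogComponent(unwiredButton);
unwiredButton.dispatchEvent(new Event('click'));
const clickOpensDialog = clickedDialog.isOpen; // false, the bug is exposed
```

Both tests exercise the same `open` method, but only the one that goes through the element notices that a user could never open the dialog.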

4. Do not assume that code and branch coverage that tends to 100% will assure the unit tests’ quality

For a significant amount of time I assumed that the quality of my unit tests was high as long as I checked the coverage metrics and kept them between 80 and 100 percent. As it turned out, nothing could have been more wrong. A low coverage percentage does indicate that you are testing your code insufficiently, but high numbers do not prove that you are testing it well and, more importantly, do not accurately reflect the effectiveness of your unit tests.
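A small sketch of how full coverage and a missed bug can coexist. The `applyDiscount` function and both test functions are hypothetical; the snippet does not measure coverage itself, it only shows that a test can execute every line of a function without checking its result.

```typescript
// Hypothetical function with a bug: the discount is added instead of subtracted.
function applyDiscount(price: number, percent: number): number {
  const discount = (price * percent) / 100;
  return price + discount; // should be price - discount
}

// A "test" that executes every line of applyDiscount but asserts nothing about the value.
function coverageOnlyTest(): boolean {
  applyDiscount(200, 10);
  return true; // always passes
}

// A test that actually checks the result.
function assertingTest(): boolean {
  return applyDiscount(200, 10) === 180;
}

const coverageOnlyPassed = coverageOnlyTest(); // true, although the function is wrong
const assertingPassed = assertingTest(); // false, the bug is caught
```

A coverage tool would report the same numbers for both tests, yet only the second one says anything about correctness.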

Let’s look at the example in image 3 - 2, in which the method behind a button is called programmatically. It is a good model for this section because, after running it, the metrics will say we fully tested the open method, but based on the explanations above, this isn’t a conclusive unit test at all.

Another example is the unit test from 1. Do not “over test”. If we do not include this unit test in our project and the title does not contain variable parts computed in the ts file, we would not even be aware that we have not verified that the title is displayed correctly. The coverage report will still say that all the branches, statements and lines in that method were covered, so the metrics are not affected by this unit test in any way. This shows that even with 100% code and branch coverage, we may not be testing every scenario we need.

Finally, think of the coverage metrics as a warning system that tells you when you test insufficiently, not as a guarantee that you have tested everything when they are high. And most importantly, do not fool yourself by leaving the unit tests unconfigured to fail on missing assertions and then intentionally omitting the expect statements in order to get all the unit tests passing with high coverage.

5. Write the unit tests while developing

Although there are differing opinions on this subject, in my opinion unit tests should be written while development is still ongoing rather than after it is complete. It may be annoying to write unit tests while developing, especially when you don’t have much experience with them, because they are time consuming and sometimes not as interesting as the development itself, but doing so brings a lot of benefits and will save you time later.

Writing the tests as early as possible, while the changes are still fresh in your mind, helps keep the code cleaner and find bugs right away. The time and cost of correcting the implementation will definitely be lower than if the bugs are fixed later, after the code reaches production.

Conclusion

Don't undervalue the importance of well-written unit tests, and don't treat them lightly just to meet metrics or a predetermined number of code lines. At first, I believed that the main objective was to create as many unit tests as possible, sometimes ignoring the fact that they were useless and, of course, not taking into account the practices I discussed in this article. Looking back, if I had spent more time learning and improving the unit tests for those applications, I would save myself time now whenever I need to fix a bug or add new functionality.

I'll conclude this article by saying that we should always keep in mind that the goal of writing unit tests is to improve the quality of the software. If you think otherwise or you want to share with me some of your experience, you can find me on LinkedIn.